- ENIAC (1945)

- EDVAC (1949)

- UNIVAC (1950)

- The Generations of Computers

- First Generation (1940s–1950s)

- Second Generation (1950s–1960s)

- Third Generation (1960s–1970s)

- Fourth Generation (1970s–Present)

- Fifth Generation (Future)

- How Computers Impact Our Lives Today

- Frequently Asked Questions:

- What is the history of computers?

- Who invented the first mechanical computer?

- What are the major eras of computer history?

- Why is the abacus important in computer history?

- What was ENIAC, and why was it important?

- How did Charles Babbage contribute to modern computing?

- What role do modern computers play in everyday life?

- Conclusion

The Electronic Era began in the 1940s, when electronic components such as vacuum tubes replaced mechanical parts, enabling computers to perform calculations far faster than their mechanical predecessors. This era introduced machines that could handle large-scale computations and laid the groundwork for the modern digital age.

ENIAC (1945)

The ENIAC (Electronic Numerical Integrator and Computer) is widely regarded as the first general-purpose electronic computer. Completed in 1945, it could be reconfigured, by rewiring cables and setting switches, to solve many different kinds of numerical problems, but it filled a large room and consumed enormous amounts of power. ENIAC revolutionized computing by demonstrating that electronic machines could automate complex calculations.

EDVAC (1949)

The EDVAC introduced the stored-program concept, in which program instructions are kept in memory alongside data instead of being set up by physically rewiring the machine. Completed in 1949, it was far more flexible than ENIAC, because running a new calculation required only loading new instructions.

UNIVAC (1950)

UNIVAC, one of the first electronic computers designed for business applications, emerged in 1950. It could handle both numeric and alphabetic data, making it valuable for corporations, government agencies, and scientific research.

The Generations of Computers

The evolution of computers is also classified into five generations, each characterized by technological innovations, speed, size, and efficiency. Understanding these generations helps illustrate how computers became smaller, faster, and more intelligent over time.

First Generation (1940s–1950s)

First-generation computers relied on vacuum tubes, which were large, expensive, and prone to overheating. They were extremely slow by modern standards. Examples include ENIAC and UNIVAC. These machines required extensive maintenance and specialized operators.

Second Generation (1950s–1960s)

The introduction of transistors revolutionized computing. Transistors were smaller, faster, more reliable, and more energy-efficient than vacuum tubes. Programmers moved from raw machine code to assembly language and early high-level languages such as FORTRAN and COBOL, making programming more efficient. A notable example is the IBM 1401, widely used in business and research.

Third Generation (1960s–1970s)

Integrated circuits (ICs), which combine many transistors on a single silicon chip, replaced individual transistors, making computers smaller, more powerful, and more energy-efficient. This era also introduced keyboards, monitors, and improved software such as operating systems. A notable example is the IBM System/360 family.

Fourth Generation (1970s–Present)

The development of Large-Scale Integration (LSI) and microprocessors marked the fourth generation. Thousands of components, and later (with Very-Large-Scale Integration) millions, could fit on a single chip, drastically increasing speed and reducing cost. Early examples include the Intel 4004 (1971) and Intel 8080 (1974) microprocessors, which laid the foundation for modern personal computers.

Fifth Generation (Future)

Fifth-generation computers focus on artificial intelligence (AI) and advanced machine learning. These systems aim to mimic human decision-making and problem-solving. While still under development, they promise smarter, more autonomous machines and advanced robotics.

How Computers Impact Our Lives Today

From their humble beginnings as mechanical tools, computers have become indispensable in nearly every aspect of life. We use computers to:

- Learn and research in schools and universities.

- Communicate globally through social media and email.

- Play games, create art, and enjoy entertainment.

- Automate businesses and improve efficiency in industries.

The journey from the abacus to AI-driven machines demonstrates the incredible power of human creativity and innovation.

Frequently Asked Questions:

What is the history of computers?

The history of computers traces the evolution of tools and machines used for calculations and problem-solving—from ancient devices like the abacus to modern AI-driven computers.

Who invented the first mechanical computer?

Charles Babbage is credited with the first design for a general-purpose mechanical computer, the Analytical Engine, which he began working on in 1834. Although it was never completed, it was designed to carry out calculations based on instructions supplied on punched cards, a concept that paved the way for modern computing.

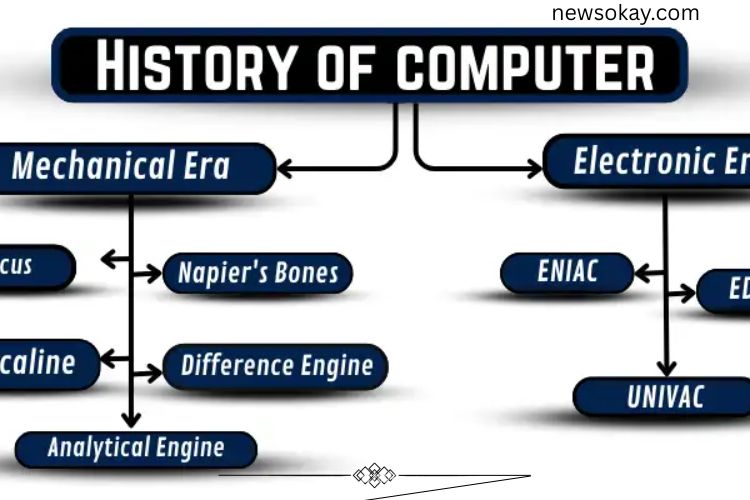

What are the major eras of computer history?

Computer history is commonly divided into two main eras: the Mechanical Era, featuring devices like the abacus and Pascaline, and the Electronic Era, which introduced electricity-powered computers like ENIAC and UNIVAC.

Why is the abacus important in computer history?

The abacus, used in various forms for thousands of years, was one of the earliest tools for systematic calculation. It laid the foundation for mechanical computing and was used to teach basic arithmetic for centuries.

What was ENIAC, and why was it important?

ENIAC (Electronic Numerical Integrator and Computer), built in 1945, was the first general-purpose electronic computer. It could perform complex calculations faster than any mechanical device, marking a breakthrough in computing.

How did Charles Babbage contribute to modern computing?

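Babbage designed the Difference Engine and the Analytical Engine. The Analytical Engine introduced concepts such as programmable instructions, a separate arithmetic unit (the "mill") and memory (the "store"), and a control mechanism that sequenced operations. These ideas remain central to the design of today's computers.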

What role do modern computers play in everyday life?

Today, computers are used in education, communication, entertainment, business, and scientific research. They simplify complex tasks, connect people worldwide, and drive innovation across all industries.

Conclusion

The journey of computers is a remarkable story of human innovation and determination. From the simple abacus to the revolutionary Analytical Engine, and from room-sized electronic machines like ENIAC to today’s AI-powered systems, computers have continually transformed how we live, work, and communicate. Understanding their history not only highlights the incredible progress of technology but also inspires curiosity about the future. For beginners, exploring this journey offers valuable insights into the foundations of modern computing and the limitless possibilities that lie ahead.